ATS Evaluation Service

Purpose and Scope

The ATS Evaluation Service scores resumes against job descriptions to predict how well they will perform in Applicant Tracking Systems. The service analyzes resume-JD compatibility, provides numerical scores (0-100), and generates actionable improvement suggestions. It leverages Google Gemini 2.0 Flash through a LangGraph state machine to perform semantic matching, keyword analysis, and company-specific tailoring recommendations.

For resume analysis and classification, see Resume Analysis Service. For generating tailored resumes based on ATS feedback, see Tailored Resume Service.

Service Overview

The ATS Evaluation Service is organized into two layers:

- Service Layer (backend/app/services/ats.py) - Validates inputs, handles JD retrieval from URLs, and normalizes outputs

- Evaluator Layer (backend/app/services/ats_evaluator/graph.py) - Executes LangGraph workflow with LLM and optional web research tools

The service accepts either raw job description text or a URL, fetches company website content for context, and returns a structured evaluation with score justification and improvement recommendations.

Sources: backend/app/services/ats.py:1-214, backend/app/services/ats_evaluator/graph.py:1-213

Data Models

Input Models

The service uses two request models depending on the calling context:

JDEvaluatorRequest

Primary request model used internally by the service layer:

| Field | Type | Required | Description |
|---|---|---|---|
| company_name | Optional[str] | No | Company name for context |
| company_website_content | Optional[str] | No | Pre-fetched website content |
| jd | str | Yes | Job description text (min 1 char) |
| jd_link | Optional[str] | No | Alternative to jd text |
| resume | str | Yes | Resume text (min 1 char) |

ATSEvaluationRequest

Alternative request model for API endpoints:

| Field | Type | Required | Description |
|---|---|---|---|
| resume_text | str | Yes | Full resume text |
| jd_text | str | Yes | Job description text |
| company_name | Optional[str] | No | Company name |
| company_website | Optional[str] | No | Company website URL |

Sources: backend/app/models/schemas.py:475-486, backend/app/models/schemas.py:460-465

Output Models

JDEvaluatorResponse

Structured response returned by the service:

| Field | Type | Description |
|---|---|---|
| success | bool | Operation success flag (default: True) |
| message | str | Human-readable status message |
| score | int | ATS compatibility score (0-100) |
| reasons_for_the_score | List[str] | Justifications for the assigned score |
| suggestions | List[str] | Actionable improvement recommendations |

The service normalizes all output to match this schema, handling edge cases like unparseable JSON or missing fields.

Sources: backend/app/models/schemas.py:488-499

Service Architecture

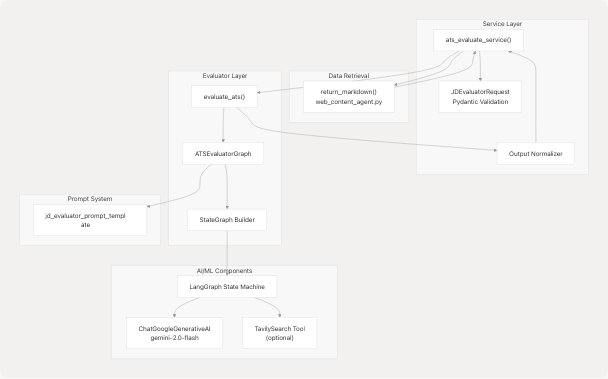

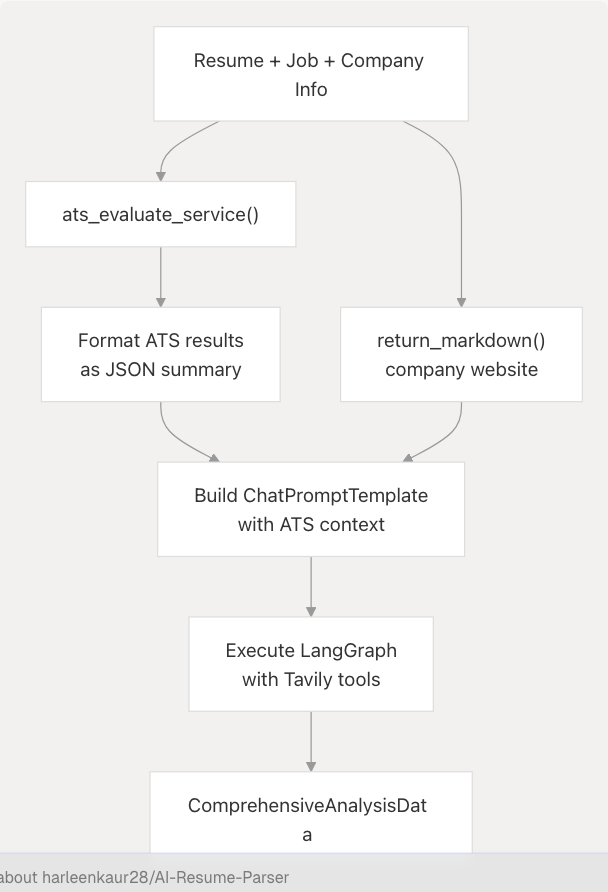

Component Diagram

Sources: backend/app/services/ats.py:24-192, backend/app/services/ats_evaluator/graph.py:42-120

Service Layer Implementation

The ats_evaluate_service() function orchestrates the evaluation workflow:

- JD Retrieval - If jd_link is provided instead of jd_text, fetches the content via return_markdown() from the web content agent
- Validation - Constructs JDEvaluatorRequest to validate all inputs using Pydantic
- Evaluation - Calls evaluate_ats() with the normalized inputs
- Normalization - Parses the JSON output, handles malformed responses, and ensures all required fields exist

Sources: backend/app/services/ats.py:24-113, backend/app/services/ats.py:142-191
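The sketch below shows the four steps without FastAPI or Pydantic. It is a rough, dependency-free version and, unlike the real async service, it is synchronous. ServiceError, EvaluationInput and evaluate_pipeline are hypothetical stand-ins for HTTPException, JDEvaluatorRequest and ats_evaluate_service(). The injected fetch_markdown and evaluator callables take the place of return_markdown() and evaluate_ats().

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


class ServiceError(Exception):
    """Hypothetical stand-in for FastAPI's HTTPException."""

    def __init__(self, status_code: int, detail: str) -> None:
        super().__init__(detail)
        self.status_code = status_code
        self.detail = detail


@dataclass
class EvaluationInput:
    """Hypothetical stand-in for JDEvaluatorRequest (min-length checks only)."""

    resume: str
    jd: str
    company_name: Optional[str] = None

    def __post_init__(self) -> None:
        if len(self.resume) < 1 or len(self.jd) < 1:
            raise ValueError("resume and jd must each contain at least 1 character")


def evaluate_pipeline(
    resume_text: str,
    jd_text: Optional[str],
    jd_link: Optional[str],
    fetch_markdown: Callable[[str], str],
    evaluator: Callable[[EvaluationInput], dict[str, Any]],
    company_name: Optional[str] = None,
) -> dict[str, Any]:
    # 1. JD retrieval: fetch from the link only when no JD text was supplied
    if not jd_text and jd_link:
        try:
            jd_text = fetch_markdown(jd_link)
        except Exception as exc:
            raise ServiceError(500, "Failed to retrieve job description from link.") from exc

    # 2. Validation: invalid input becomes a 400, mirroring the service layer
    try:
        request = EvaluationInput(resume=resume_text, jd=jd_text or "", company_name=company_name)
    except ValueError as exc:
        raise ServiceError(400, str(exc)) from exc

    # 3. Evaluation: delegate to the injected evaluator
    raw = evaluator(request)

    # 4. Normalization (simplified): make sure every response field exists
    defaults = {"success": True, "message": "", "score": 0,
                "reasons_for_the_score": [], "suggestions": []}
    return {**defaults, **raw}
```

Injecting the fetcher and evaluator keeps each step testable in isolation. A missing JD surfaces as a 400, and a failed fetch surfaces as a 500.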

LangGraph State Machine Implementation

ATSEvaluatorGraph Class

The ATSEvaluatorGraph class in backend/app/services/ats_evaluator/graph.py:48-120 encapsulates the LangGraph workflow:

Constructor Parameters:

- resume_text: str - Full resume content
- jd_text: str - Job description
- company_name: str | None - Company name for research
- company_website: str | None - Website URL to fetch via return_markdown()
- llm: ChatGoogleGenerativeAI | None - Optional LLM instance (uses the shared app.core.llm.llm by default)
- config: GraphConfig | None - Model configuration (default: MODEL_NAME, temperature 0.1)

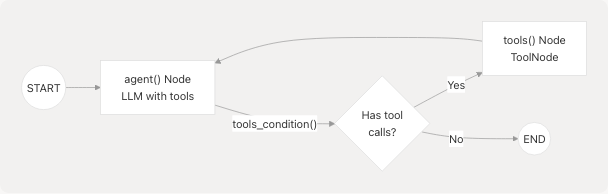

Graph Architecture

Sources: backend/app/services/ats_evaluator/graph.py:98-116
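The graph diagram is not reproduced in text here. This sketch assumes the common LangGraph tool-calling layout: an agent node, a tools node, and a conditional edge that loops back to the agent while the model keeps requesting tools. Under that assumption, the control flow can be imitated in plain Python. This is not LangGraph's API. Message, run_agent_loop and the tool_calls dict shape are hypothetical, chosen only to make the loop visible.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Message:
    role: str  # "system", "assistant" or "tool"
    content: str
    tool_calls: list[dict] = field(default_factory=list)


def run_agent_loop(
    system_prompt: list[Message],
    llm: Callable[[list[Message]], Message],
    tools: dict[str, Callable[[str], str]],
    max_steps: int = 10,
) -> list[Message]:
    """Dependency-free imitation of an agent -> tools -> agent cycle."""
    messages: list[Message] = []
    for _ in range(max_steps):
        # "agent" node: the system prompt is prepended on every call, as in agent()
        response = llm([*system_prompt, *messages])
        messages.append(response)
        # Conditional edge: finish when the model requests no tools
        if not response.tool_calls:
            return messages
        # "tools" node: run each requested tool and feed its result back
        for call in response.tool_calls:
            result = tools[call["name"]](call["query"])
            messages.append(Message(role="tool", content=result))
    raise RuntimeError("agent did not finish within max_steps")
```

The max_steps guard reflects a design choice that tool loops generally need: a cap so a model that keeps requesting searches cannot loop forever. LangGraph offers a comparable safeguard through its recursion limit.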

Tool Integration

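The graph binds TavilySearch only when it can be initialized, which requires TAVILY_API_KEY to be configured. If the import or construction fails, the evaluator runs without tools: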

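```python
def _try_init_tavily() -> list:
    """Return [TavilySearch] when it can be created, otherwise an empty tool list."""
    try:
        from langchain_tavily import TavilySearch

        return [TavilySearch(max_results=3, topic="general")]
    except Exception:
        # Missing package or failed initialization (e.g. no TAVILY_API_KEY):
        # fall back to running the evaluator without web search
        return []
```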

Tools are bound to the LLM using llm.bind_tools(tools=self.tools), enabling the model to request web searches for company research during evaluation.

Sources: backend/app/services/ats_evaluator/graph.py:27-40, backend/app/services/ats_evaluator/graph.py:73-80
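The try/except approach relies on initialization failing when the package or key is missing. An alternative is to check both conditions up front, which makes the decision explicit and easy to unit-test. This sketch is not what graph.py does. The helper tavily_search_enabled is hypothetical. It uses importlib.util.find_spec, which returns None when a top-level module cannot be found, without importing it.

```python
import importlib.util
import os
from typing import Mapping, Optional


def tavily_search_enabled(env: Optional[Mapping[str, str]] = None) -> bool:
    """Return True only if TAVILY_API_KEY is set and langchain_tavily is importable."""
    env = os.environ if env is None else env
    if not env.get("TAVILY_API_KEY"):
        return False
    # find_spec locates the package without importing it
    return importlib.util.find_spec("langchain_tavily") is not None
```

Passing the environment as a mapping lets tests exercise both branches without mutating os.environ.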

Agent Node Function

The agent() method processes messages through the LLM:

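```python
# Method of ATSEvaluatorGraph (MessagesState comes from langgraph.graph)
def agent(self, state: MessagesState):
    msgs = state["messages"]
    # Prepend the system prompt to the accumulated conversation
    inp = [*self.system_prompt] + msgs
    # The tool-bound LLM either answers or requests a tool call
    response = self.llm_with_tools.invoke(inp)
    # MessagesState's reducer appends this message to the state
    return {"messages": [response]}
```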

The system prompt is constructed from jd_evaluator_prompt_template with:

- Resume text
- Job description
- Company name (or "the company" if none is given)
- Company website content (fetched via return_markdown())

Sources: backend/app/services/ats_evaluator/graph.py:92-96, backend/app/services/ats_evaluator/graph.py:82-89
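The text of jd_evaluator_prompt_template is not reproduced on this page, so the template below is invented for illustration. Only the four inputs and the "the company" fallback come from the description above. The real code unpacks the prompt as a list of messages ([*self.system_prompt]), which suggests a chat prompt template rather than a plain string. This sketch uses str.format for simplicity, and PROMPT_TEMPLATE and build_system_prompt are hypothetical names.

```python
from typing import Optional

# Hypothetical template; literal braces (the JSON shape hint) are doubled for str.format
PROMPT_TEMPLATE = (
    "You are an ATS evaluator for {company_name}.\n"
    "Company website:\n{company_website_content}\n\n"
    "Job description:\n{jd}\n\n"
    "Resume:\n{resume}\n\n"
    'Reply with JSON: {{"score": int, "reasons_for_the_score": [str], "suggestions": [str]}}'
)


def build_system_prompt(
    resume: str, jd: str, company_name: Optional[str], website_content: Optional[str]
) -> str:
    return PROMPT_TEMPLATE.format(
        company_name=company_name or "the company",  # fallback described above
        company_website_content=website_content or "",
        jd=jd,
        resume=resume,
    )
```

Forgetting to double the braces in the JSON hint is a common pitfall. str.format would treat them as replacement fields and raise a KeyError.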

Evaluation Workflow

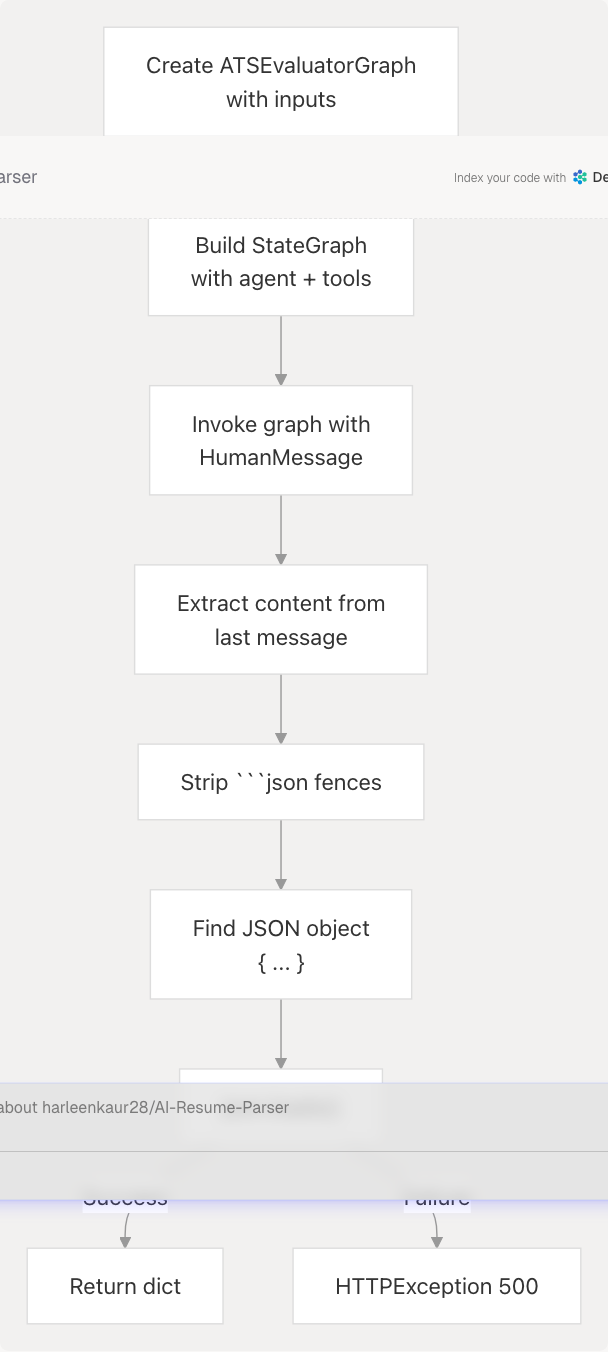

evaluate_ats() Function

The evaluate_ats() function executes the graph and parses its JSON output.

Sources: backend/app/services/ats_evaluator/graph.py:122-207

JSON Parsing Logic

The function handles multiple output formats:

- Code Fence Removal - Strips a leading json-tagged code fence and a trailing code fence (triple backticks) using regex
- JSON Extraction - Locates the first { and the last } to isolate the JSON object
- Fallback Parsing - If the initial parse fails, attempts to parse the substring between the braces
- Error Handling - Raises HTTPException with status 500 if the JSON is unparseable

```python
import json
import re

# Strip a leading ```json (or bare ```) fence and a trailing ``` fence
code_fence_pattern = re.compile(r"^```(json)?\n", re.IGNORECASE)
content_str = code_fence_pattern.sub("", content_str)
if content_str.endswith("```"):
    content_str = content_str[: content_str.rfind("```")]

# Find and parse JSON: parse directly if the text starts with an object,
# otherwise slice from the first "{" to the last "}"
if content_str.startswith("{"):
    json_obj = json.loads(content_str)
else:
    start = content_str.find("{")
    end = content_str.rfind("}")
    json_obj = json.loads(content_str[start : end + 1])
```

Sources: backend/app/services/ats_evaluator/graph.py:153-204
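A self-contained variant of that logic can be wrapped in a function so it can be tested. This is a sketch rather than the code in graph.py. It differs in three ways: it strips surrounding whitespace, it tries a direct parse before the brace-slicing fallback, and it raises a hypothetical JSONExtractionError where the service raises HTTPException(status_code=500).

```python
import json
import re
from typing import Any

# Leading ```json / ``` fence, tolerating trailing spaces before the newline
_FENCE_RE = re.compile(r"^```(?:json)?\s*\n?", re.IGNORECASE)


class JSONExtractionError(ValueError):
    """Hypothetical error type; the service maps this case to HTTP 500."""


def extract_json_object(text: str) -> dict[str, Any]:
    content = _FENCE_RE.sub("", text.strip())
    if content.endswith("```"):
        content = content[: content.rfind("```")].strip()

    # First attempt: the whole payload is already a JSON object
    try:
        obj = json.loads(content)
        if isinstance(obj, dict):
            return obj
    except json.JSONDecodeError:
        pass

    # Fallback: parse the span between the first "{" and the last "}"
    start, end = content.find("{"), content.rfind("}")
    if start == -1 or end <= start:
        raise JSONExtractionError("no JSON object found in model output")
    try:
        return json.loads(content[start : end + 1])
    except json.JSONDecodeError as exc:
        raise JSONExtractionError("model output is not valid JSON") from exc
```

Checking start == -1 explicitly avoids the silent empty slice that content_str[-1:0] would produce when the output contains no braces at all.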

Integration with Other Services

Used By Tailored Resume Service

The Tailored Resume Service (backend/app/services/tailored_resume.py) calls ats_evaluate_service() to get ATS feedback before generating optimized resumes:

```python
ats_result = await ats_evaluate_service(
    resume_text=resume,
    jd_text=jd,
    jd_link=None,
    company_name=company_name,
    company_website=company_website,
)
```

The ATS score and suggestions are then passed to the resume generation prompt to guide improvements.

Sources: backend/app/services/resume_generator/graph.py:87-113
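This page does not show how the score and suggestions are rendered into the generation prompt. One plausible shape is a short plain-text block listing the score and each suggestion. The sketch below uses a hypothetical ATSResult dataclass that mirrors the relevant JDEvaluatorResponse fields and a hypothetical format_ats_feedback helper.

```python
from dataclasses import dataclass, field


@dataclass
class ATSResult:
    """Hypothetical mirror of the JDEvaluatorResponse fields used here."""

    score: int
    reasons_for_the_score: list[str] = field(default_factory=list)
    suggestions: list[str] = field(default_factory=list)


def format_ats_feedback(result: ATSResult) -> str:
    """Render ATS feedback as plain text for a resume-generation prompt."""
    lines = [f"Current ATS score: {result.score}/100", "Suggestions to address:"]
    # Use an explicit placeholder so the prompt never contains an empty section
    lines += [f"- {s}" for s in result.suggestions] or ["- (none)"]
    return "\n".join(lines)
```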

Used By Resume Pipeline

The run_resume_pipeline() function in backend/app/services/resume_generator/graph.py:72-235 integrates ATS evaluation into the tailored resume workflow.

Sources: backend/app/services/resume_generator/graph.py:86-149

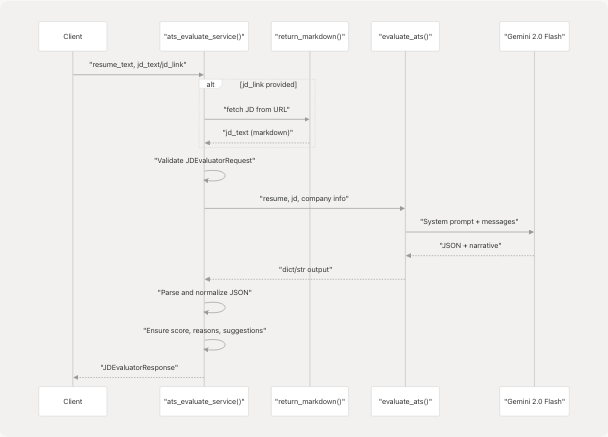

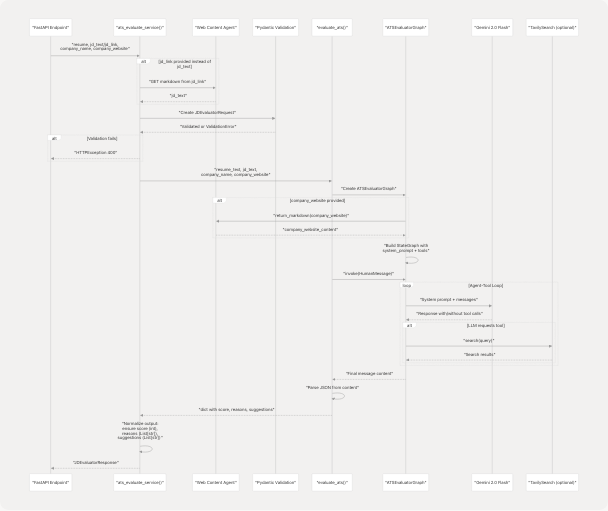

Request/Response Flow

Full End-to-End Flow

Sources: backend/app/services/ats.py:24-113, backend/app/services/ats_evaluator/graph.py:122-207

Error Handling and Normalization

Service Layer Error Handling

The service handles errors at three points: JD retrieval, input validation, and output normalization.

JD Retrieval Errors

```python
try:
    jd_text = web_agent.return_markdown(jd_link)
except Exception as retrieval_error:
    logger.exception("Failed to fetch job description from link")
    raise HTTPException(
        status_code=500,
        detail="Failed to retrieve job description from link.",
    )
```

Sources: backend/app/services/ats.py:46-60

Validation Errors

```python
try:
    JDEvaluatorRequest(
        company_name=company_name,
        company_website_content=company_website,
        jd=jd_text,
        resume=resume_text,
    )
except ValidationError as ve:
    logger.warning("ATS evaluation validation error")
    raise HTTPException(status_code=400, detail=str(ve))
```

Sources: backend/app/services/ats.py:78-97

Output Normalization

The service normalizes the raw evaluator output to ensure JDEvaluatorResponse compliance:

| Field | Normalization Logic |
|---|---|
| success | bool(analysis_json.get("success", True)) |
| message | analysis_json.get("message", "") or "" - ensures a non-None string |
| score | int(analysis_json.get("score", 0)) with exception handling; defaults to 0 |
| reasons_for_the_score | Ensures List[str]: converts single values to a list and stringifies all items |
| suggestions | Ensures List[str]: converts single values to a list and stringifies all items |

```python
try:
    score = int(analysis_json.get("score", 0))
except Exception:
    score = 0

raw_reasons = analysis_json.get("reasons_for_the_score", []) or []
if isinstance(raw_reasons, list):
    reasons_for_the_score = [str(r) for r in raw_reasons]
else:
    reasons_for_the_score = [str(raw_reasons)]
```

Sources: backend/app/services/ats.py:142-180
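Applying the same rules to all five fields gives a single normalization function. This is a sketch assembled from the table above, not the code in ats.py. The names normalize_evaluation and _as_str_list are hypothetical. The score conversion catches only TypeError and ValueError instead of bare Exception, and message is additionally coerced with str().

```python
from typing import Any


def _as_str_list(value: Any) -> list[str]:
    """Coerce a scalar or list into a list of strings; falsy values become []."""
    value = value or []
    if isinstance(value, list):
        return [str(v) for v in value]
    return [str(value)]


def normalize_evaluation(analysis_json: dict[str, Any]) -> dict[str, Any]:
    try:
        score = int(analysis_json.get("score", 0))
    except (TypeError, ValueError):
        score = 0  # missing, None, or non-numeric scores fall back to 0
    return {
        "success": bool(analysis_json.get("success", True)),
        "message": str(analysis_json.get("message") or ""),
        "score": score,
        "reasons_for_the_score": _as_str_list(analysis_json.get("reasons_for_the_score")),
        "suggestions": _as_str_list(analysis_json.get("suggestions")),
    }
```

The helper keeps the list coercion in one place, so the two list fields cannot drift apart.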

Logging

The service includes structured logging at key checkpoints:

- Entry: logger.debug("Starting ATS evaluation") with the company name and JD source flags
- Invocation: logger.debug("Invoking ATS evaluator graph")
- Raw Output: logger.debug("ATS evaluator raw output") with the output type
- Normalized Payload: logger.debug("ATS evaluator normalized payload") with all response fields
- Completion: logger.debug("ATS evaluation completed") with the final score and success flag
- Errors: logger.exception() for retrieval failures and general exceptions; logger.warning() for validation errors

Sources: backend/app/services/ats.py:33-40, backend/app/services/ats.py:100-189
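With the standard logging module, contextual fields can be attached to a record through the extra argument. It sets them as attributes on the LogRecord, so a JSON formatter or log pipeline can index them. The field names below (company_name, has_jd_text, has_jd_link), the logger name, and log_evaluation_start are hypothetical. This page does not list the exact keys the service logs.

```python
import logging
from typing import Optional

logger = logging.getLogger("ats_evaluation_sketch")


def log_evaluation_start(
    company_name: Optional[str], jd_text: Optional[str], jd_link: Optional[str]
) -> None:
    # Log boolean flags rather than the raw JD, keeping resume/JD content out of logs
    logger.debug(
        "Starting ATS evaluation",
        extra={
            "company_name": company_name,
            "has_jd_text": bool(jd_text),
            "has_jd_link": bool(jd_link),
        },
    )
```

Keys passed in extra must not collide with built-in LogRecord attributes such as name, msg or message. Otherwise logging raises a KeyError.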

Configuration

Environment Variables

| Variable | Purpose | Used By |
|---|---|---|
| GOOGLE_API_KEY | Google Generative AI authentication | ChatGoogleGenerativeAI LLM initialization |
| TAVILY_API_KEY | Tavily Search API (optional) | TavilySearch tool for web research |

Model Configuration

The ATS evaluator uses GraphConfig dataclass with defaults:

```python
from dataclasses import dataclass

from app.core.llm import MODEL_NAME


@dataclass
class GraphConfig:
    model: str = MODEL_NAME  # "gemini-2.0-flash-exp" from app.core.llm
    temperature: float = 0.1
```

A low temperature (0.1) keeps outputs focused and close to deterministic, which suits scoring. It does not guarantee identical scores across runs.

Sources: backend/app/services/ats_evaluator/graph.py:42-46, backend/app/core/llm.py

Usage Example

Typical Service Call

```python
from app.services.ats import ats_evaluate_service

# Run inside an async function (or an async-capable REPL)
result = await ats_evaluate_service(
    resume_text="John Doe\nSoftware Engineer...",
    jd_text="We are seeking a Senior Python Developer...",
    company_name="Acme Corp",
    company_website="https://acme.example.com",
)

print(f"ATS Score: {result.score}/100")
print(f"Reasons: {result.reasons_for_the_score}")
print(f"Suggestions: {result.suggestions}")
```

Alternative with JD Link

```python
result = await ats_evaluate_service(
    resume_text=resume,
    jd_text=None,  # Not provided
    jd_link="https://jobs.example.com/posting/12345",
    company_name="Example Inc",
    company_website=None,
)
```

The service automatically fetches the JD content from the link using the web content agent.

Sources: backend/app/services/ats.py:24-30